9.12. Predicting Pizza Prices - Linear Regression

Linear regression is probably one of the most widely used algorithms in data science, and in many other applications. One of the best things about linear regression is that it allows us to use observations and measurements of things we know to make predictions about new things. These predictions might be about the likelihood of a person buying a product online or the chance that someone will default on their loan payment. To start, we are going to use an even simpler example of predicting the price of pizza based on its diameter.

We conducted an extensive study of the pizza places in one neighborhood, and here is a table of the pizza diameters and prices we observed.

| Diameter (inches) | Price ($) |
|---|---|
| 6 | 7 |
| 8 | 9 |
| 10 | 13 |
| 14 | 17.5 |
| 18 | 18 |

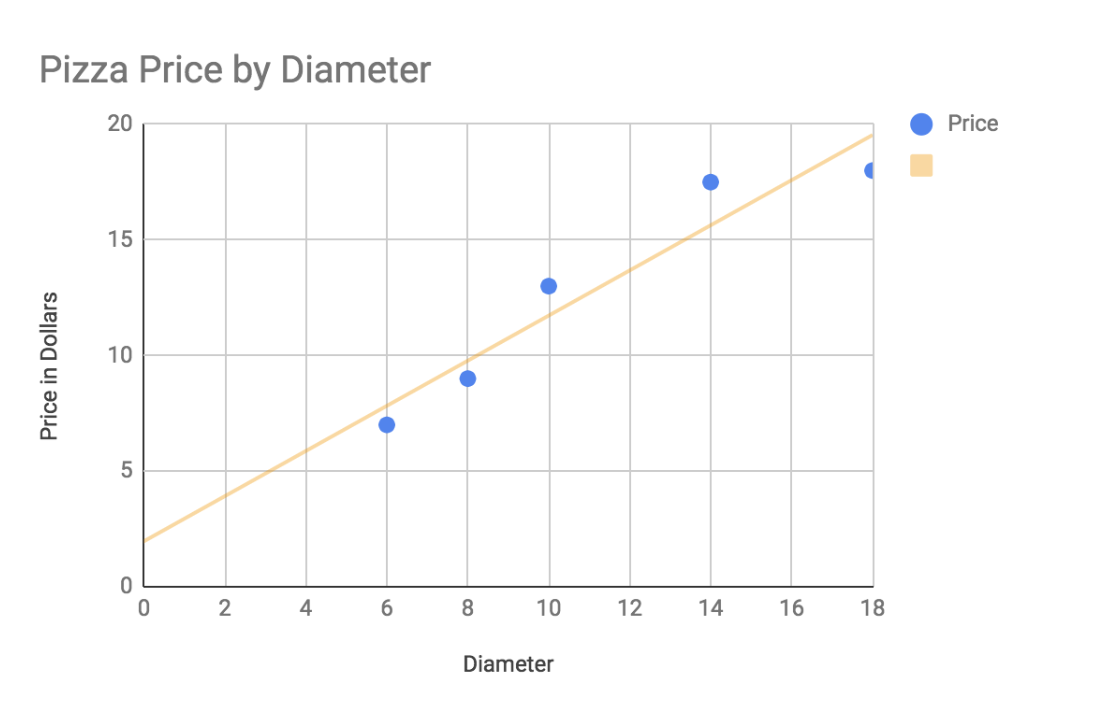

Your first task is to put the data into a spreadsheet and make a scatter plot of the diameter versus the price. What you can see pretty easily from this graph is that as the diameter of the pizza goes up, so does the price.

If you were to draw a straight line through the points that came as close as possible to all of them, it would look like this.

The orange line, called the trendline or the regression line, is our best guess at a linear relationship that describes the data. This is important because we can come up with an equation for the line that will allow us to predict the y-value (price) for any given x-value (diameter). Linear regression is all about finding the best equation for the line.

There are actually several different ways we can come up with the equation for the line. We will look at two different solutions: one is a closed-form equation that will work for any problem like this in just two dimensions. The second is a solution that will allow us to generalize the idea of a best-fit line to many dimensions!

Recall the equation for a line that you learned in algebra: \(y = mx + b\). What we need to do is to determine values for m and b. One way we can do that is to simply guess, and keep refining our guesses until we get to a point where we are not really getting any better. You may think this sounds kind of stupid, but it is actually a pretty fundamental part of many machine learning algorithms.

You may also be wondering how we decide what it means to “get better”. In the case of our pizza problem, we have some data to work with, so for a given guess for m and b we can compare the calculated y (price) against the known value of y and measure our error. For example, suppose we guess that \(b = 5\) and \(m = 0.7\). For a diameter of 10 we get \(y = 0.7 \times 10 + 5\), which evaluates to 12. Checking against our table, the value should be 13, so our error is our known value minus our predicted value: \(13 - 12 = 1\). If we try the same thing for a diameter of 8 we get \(y = 0.7 \times 8 + 5\), which evaluates to 10.6. The error here is \(9 - 10.6 = -1.6\).
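To make the arithmetic concrete, here is a minimal Python sketch of the same check. The names `PIZZAS` and `predict` are illustrative and not part of the lesson; the data are the five observations from the table above, and the guess is the slope of 0.7 and intercept of 5 used in the text.

```python
# Observed (diameter, price) pairs from the table above.
PIZZAS = [(6, 7), (8, 9), (10, 13), (14, 17.5), (18, 18)]


def predict(diameter, m, b):
    """Price predicted by the line y = m*x + b."""
    return m * diameter + b


m_guess, b_guess = 0.7, 5  # the guess used in the text

for diameter, price in PIZZAS:
    predicted = predict(diameter, m_guess, b_guess)
    error = price - predicted  # known value minus predicted value
    print(f"diameter {diameter:>2}: predicted {predicted:.2f}, error {error:+.2f}")
```

Changing `m_guess` and `b_guess` and rerunning is the code equivalent of experimenting with different guesses in the spreadsheet.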

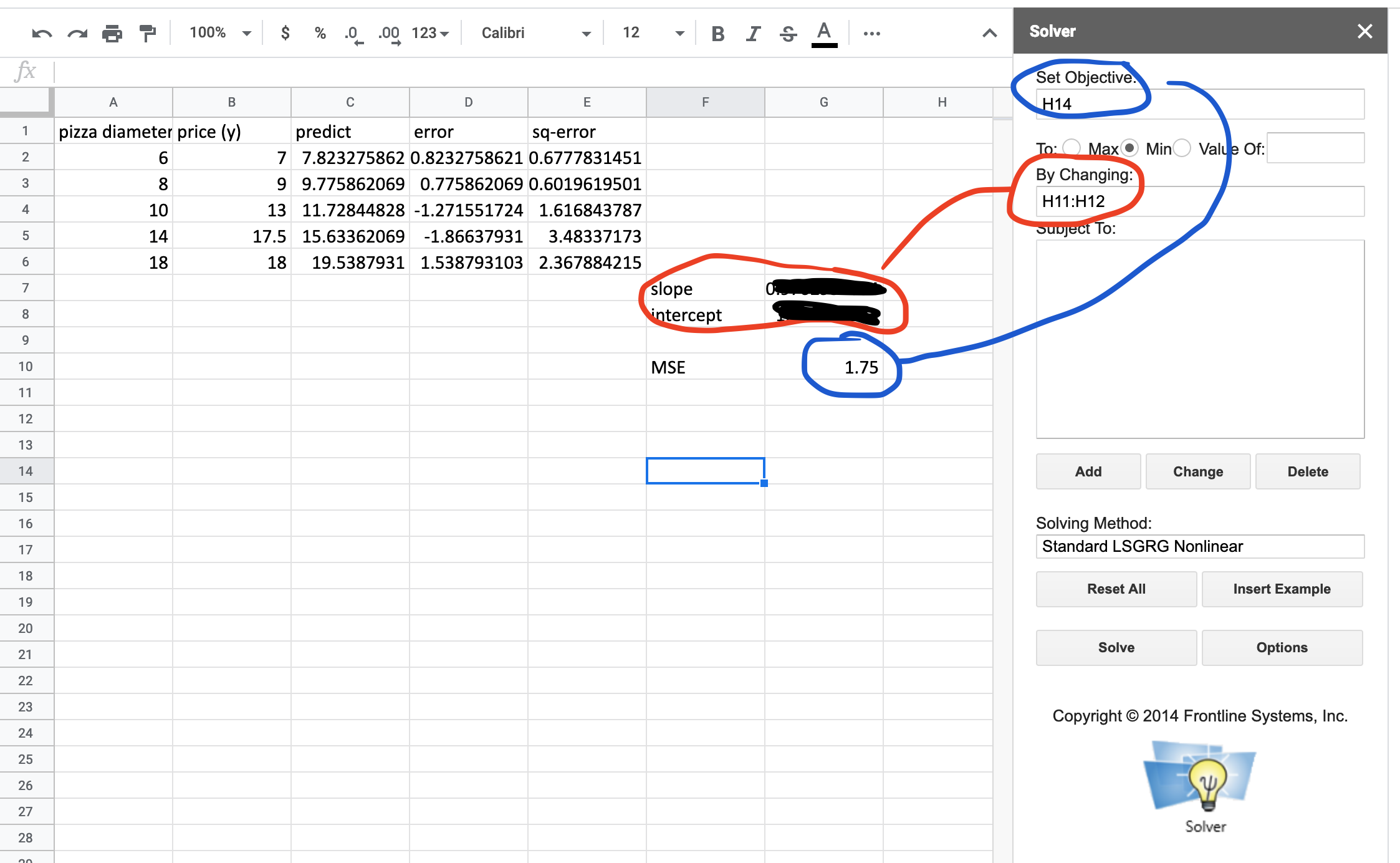

Add a column to the spreadsheet that contains the predicted price for the pizza using the diameter as the x-value and using a slope of 0.7 and intercept of 5. Now, plot the original set of data along with this new table of data. Make the original data one color and your calculated table another color. Experiment with some different guesses for the slope and intercept to see how it works.

Next, let’s add another column to the table where we include the error. Now we have our ‘predicted values’ and a bunch of error measurements. One common way we combine these error measurements together is to compute the Mean Squared Error (MSE). This is easy to compute because all we have to do is square each of our errors, add them up and then divide by the number of error terms we have.

Why do we square them first? Well, did you notice that in our example, one of the errors was positive and one was negative? If we add together both positive and negative numbers, they can cancel each other out, making our final mean value smaller. So we square them to be sure they are all positive. We call this calculation of the MSE an objective function. In many machine learning algorithms, our goal is to minimize the objective function. That is what we want to do here: we want to find the values for m and b that minimize the error.
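The cancellation problem is easy to see in code. The sketch below is only an illustration (the helper names `errors_for` and `mean_squared_error` are made up for this example); it compares the plain average of the errors with the MSE for the guess of slope 0.7 and intercept 5.

```python
# Observed (diameter, price) pairs from the table above.
PIZZAS = [(6, 7), (8, 9), (10, 13), (14, 17.5), (18, 18)]


def errors_for(m, b):
    """Known price minus predicted price for every pizza."""
    return [price - (m * diameter + b) for diameter, price in PIZZAS]


def mean_squared_error(m, b):
    """Square each error, add them up, divide by the number of errors."""
    errs = errors_for(m, b)
    return sum(e ** 2 for e in errs) / len(errs)


errs = errors_for(0.7, 5)
print("mean of raw errors:", round(sum(errs) / len(errs), 4))
print("mean squared error:", round(mean_squared_error(0.7, 5), 4))
```

For this guess the raw errors average to about 0.06, because the positive and negative errors largely cancel, while the MSE is about 3.17, which reflects how far off the predictions really are.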

Add two cells to your spreadsheet where you can try different values for the slope and intercept. Also, update the column where you compute a value for the price to use the values from these cells rather than the hardcoded values of 0.7 and 5.

Q-1: Using a slope of 2.5 and an intercept of 0.5, what is the MSE?

Now let’s use the Solver functionality to find the best values for the slope and intercept. Make sure that you have the Frontline Systems Solver add-on installed for Google Sheets. If you haven’t used Solver before, you may want to take a look at Optimization Using Solver. This problem doesn’t even need any constraints: all we want to do is minimize the MSE by changing the values for slope and intercept. Note that because we are squaring the errors, this is a non-linear problem and will require the Standard LSGRG Nonlinear solver. Now, set up the solver and run it for the pizza problem.

Q-2: Fill in the values Solver found for the slope and intercept.

If you are having any trouble, your setup should look like this.

9.12.1. Closed-Form Solution

The closed-form solution to this problem is known to many science students.

Let’s use the closed-form solution to calculate values for the slope and intercept. To do this, you will need to calculate a value for \(\bar{x}\) and \(\bar{y}\) (the mean value for x and y respectively). You can add two columns to do the calculation of \(y_i - \bar{y}\) and \(x_i - \bar{x}\).
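For reference, the standard least-squares formulas for the line \(y = mx + b\) can be written in terms of exactly those quantities. This is the usual textbook form, stated here on the assumption that it is the closed-form solution the section has in mind; \(n\) is the number of observations.

\[
m = \frac{\sum_{i=1}^{n} (x_i - \bar{x})(y_i - \bar{y})}{\sum_{i=1}^{n} (x_i - \bar{x})^2},
\qquad
b = \bar{y} - m\,\bar{x}
\]

In the spreadsheet, the numerator is the sum of the products of the two new columns, and the denominator is the sum of the squares of the \(x_i - \bar{x}\) column.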

Q-3: What values do you get for the slope and intercept?

9.12.2. The Payoff - Supervised Learning

The payoff from this exercise with Solver is that we have “learned” values for the slope and intercept that will allow us to predict the price of any pizza! If your friend calls you up and says “I just ate a 7-inch pizza, guess how much it cost?”, you can quickly do the math of \(1.97 + 0.98 \times 7\) and guess $8.83! Won’t they be amazed?

In the world of machine learning, using the sample data for pizza along with a solver-like algorithm to find the values for the slope and intercept is called supervised learning. That is because we are using the known prices of different pizzas along with their diameters to help correct our algorithm and come up with a value for the slope and intercept. The values that the algorithm learns are called our model. This model is pretty simple because it just uses two numbers and the formula for a line. But don’t let the simplicity fool you: regression is one of the most commonly used algorithms in a data scientist’s arsenal.

In the next section, we’ll make a fancier model that uses more data to do a better job of making predictions. If you want to try your hand at writing your own learning algorithm, you can do that in the optional section below.

9.12.3. A Simple Machine Learning Approach (Optional)

1. Pick a random value for m and b.
2. Compute the MSE for all our known points.
3. Repeat the following steps 1000 times.
   a. Make m slightly bigger and recompute the MSE. Does that make the MSE smaller? If so, use this new value for m. If not, make m slightly smaller and see if that helps.
   b. Make b slightly bigger and recompute the MSE. Does that make the MSE smaller? If so, use this new value for b and go back to step 3a. If not, try a slightly smaller b and see if that makes the MSE smaller. If so, keep this value for b and go back to step 3a.

After repeating the above enough times, we will be very close to the best possible values for m and b. We can now use these values to make predictions for other pizzas where we know the diameter but don’t know the price.

Let’s develop some intuition for this whole thing by writing a function and trying to minimize the error.

You will write three functions:

- `compute_y(x, m, b)`
- `compute_all_y(list_of_x)`, which should use `compute_y`
- `compute_mse(list_of_known, list_of_predictions)`

Next, write a function that systematically tries different values for m and b to minimize the MSE. Put this function in a for loop and iterate 1000 times. See what your value is for m and b at the end.
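If you get stuck, here is one possible sketch of the guess-and-refine loop, not the only way to do it. It makes a few assumptions that are not in the lesson: `compute_all_y` takes `m` and `b` as extra parameters, the helpers `mse_for`, `nudge`, and `fit` are hypothetical names, the step size is fixed at 0.01, and the random starting ranges and seed are arbitrary.

```python
import random

# Observations from the pizza table.
DIAMETERS = [6, 8, 10, 14, 18]
PRICES = [7, 9, 13, 17.5, 18]


def compute_y(x, m, b):
    """Predicted y for one x on the line y = m*x + b."""
    return m * x + b


def compute_all_y(list_of_x, m, b):
    """Predicted y for every x (m and b passed in explicitly: an assumption)."""
    return [compute_y(x, m, b) for x in list_of_x]


def compute_mse(list_of_known, list_of_predictions):
    """Mean of the squared differences between known and predicted values."""
    squared = [(k - p) ** 2 for k, p in zip(list_of_known, list_of_predictions)]
    return sum(squared) / len(squared)


def mse_for(m, b):
    """MSE of the line (m, b) against the pizza data."""
    return compute_mse(PRICES, compute_all_y(DIAMETERS, m, b))


def nudge(value, step, error_of):
    """Try value + step, then value - step; keep the first one that lowers the error."""
    current_error = error_of(value)
    for candidate in (value + step, value - step):
        if error_of(candidate) < current_error:
            return candidate
    return value  # neither direction helped


def fit(steps=1000, step_size=0.01, seed=0):
    """Randomly initialise m and b, then nudge each one in turn `steps` times."""
    rng = random.Random(seed)
    m = rng.uniform(0, 2)  # arbitrary starting range
    b = rng.uniform(0, 5)  # arbitrary starting range
    history = []
    for _ in range(steps):
        m = nudge(m, step_size, lambda candidate: mse_for(candidate, b))
        b = nudge(b, step_size, lambda candidate: mse_for(m, candidate))
        history.append(mse_for(m, b))  # save the MSE so it can be plotted later
    return m, b, history


m, b, history = fit()
print(f"m = {m:.3f}, b = {b:.3f}, final MSE = {history[-1]:.4f}")
```

Because the step size never changes, the loop can stall a little short of the best values once neither nudge lowers the MSE, so expect the result to be near the Solver answer rather than identical to it.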

Congratulations! You have just written your first machine learning algorithm. One fun thing you can do is to save the MSE at the end of each time through the loop, then plot it. You should see the error go down pretty quickly, then level off or go down very gradually. Note that the error will never go to 0 because the data isn’t perfectly linear. But nothing in the real world is!

At this point, your algorithm’s ability to learn is limited by how much you change the slope and intercept values each time through the loop. In the beginning, it’s good to change them by a lot but as you get closer to the best answer, it’s better to tweak them by smaller and smaller amounts. Can you adjust your code above to do this?
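One way to do this, sketched below under assumptions of our own, is to start with a large step and halve it whenever no nudge to m or b lowers the MSE. The function names, the starting point of (0, 0), and the initial step of 1.0 are all illustrative choices, not part of the lesson.

```python
# Observations from the pizza table.
DIAMETERS = [6, 8, 10, 14, 18]
PRICES = [7, 9, 13, 17.5, 18]


def mse(m, b):
    """Mean squared error of the line y = m*x + b on the pizza data."""
    errors = [y - (m * x + b) for x, y in zip(DIAMETERS, PRICES)]
    return sum(e * e for e in errors) / len(errors)


def fit_with_shrinking_step(m=0.0, b=0.0, step=1.0, rounds=1000):
    """Nudge m and b by `step`; halve `step` whenever no nudge helps."""
    best = mse(m, b)
    for _ in range(rounds):
        improved = False
        # Try a bigger m, a smaller m, a bigger b, then a smaller b.
        for dm, db in ((step, 0), (-step, 0), (0, step), (0, -step)):
            candidate = mse(m + dm, b + db)
            if candidate < best:
                m, b, best = m + dm, b + db, candidate
                improved = True
        if not improved:
            step /= 2  # we are close: take smaller steps from now on
    return m, b, best


m, b, best = fit_with_shrinking_step()
print(f"m = {m:.4f}, b = {b:.4f}, MSE = {best:.4f}")
```

Big early steps cover ground quickly, and the shrinking steps let the search settle much closer to the best values than a fixed step can.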

For two-dimensional data, there is even a closed-form solution to this problem that one could derive using a bit of calculus. It is worth checking that the solution your loop found is very close to the one given by the simple formulas \(\text{slope} = \text{covariance} / \text{variance}\) and \(\text{intercept} = \bar{y} - \text{slope} \times \bar{x}\), where the covariance is that of x and y and the variance is that of x. Write a function that will calculate the slope and intercept using this method, and compare them with the values your learning algorithm found.
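A minimal sketch of such a function follows. The name `fit_closed_form` is hypothetical, and the covariance and variance are computed by hand so the example needs no libraries.

```python
def fit_closed_form(xs, ys):
    """Slope = covariance(x, y) / variance(x); intercept = y_bar - slope * x_bar."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    covariance = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys)) / n
    variance = sum((x - x_bar) ** 2 for x in xs) / n
    slope = covariance / variance
    intercept = y_bar - slope * x_bar
    return slope, intercept


# Observations from the pizza table.
diameters = [6, 8, 10, 14, 18]
prices = [7, 9, 13, 17.5, 18]

slope, intercept = fit_closed_form(diameters, prices)
print(f"slope = {slope:.4f}, intercept = {intercept:.4f}")
```

On the pizza data this gives a slope of about 0.976 and an intercept of about 1.966, which round to the 0.98 and 1.97 used in the 7-inch pizza prediction earlier.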
